The title “Ethics of Facial Recognition Software” is a bit misleading because software in itself has no ethical norms. But software developers do, as do the code writers who work for them. So, their ethics when they write software is fair game. Perhaps “fair” is not achievable, so for this narrow purpose, I’ll evaluate whether what they do passes moral muster.

Facial recognition is almost ancient technology, dating to the 1960s, the era of JFK and the civil rights movement. Woodrow Wilson Bledsoe[1] is widely recognized as its founder. But it languished for fifty years before coming back to life in the last decade. Today, investigators, cops, and other well-intentioned public servants are detecting faces in crowds and using the software to identify missing people. By missing, I mean unknown, not yet in jail, or not yet found out. Hiding out is getting much harder to do.

The race is on for biometric innovation. All the usual suspects are vying for the golden ring: Google, Apple, Facebook, Amazon, and Microsoft (GAFAM), plus too many others to count. As they sort out nostrils and earlobes, the world awaits facial recognition as if it were sliced bread or the telephone, that marvel of talking to people miles away over wires strung from trees and poles.

But what of ethics? Will the searchers consider the ethical overtones as they hunt for criminals? As recently as June 2020, three of America’s largest technology companies reacted: “IBM, Amazon and Microsoft announced sweeping restrictions on their sale of facial recognition tools and called for federal regulation amid protests across the United States against police violence and racial profiling.”[2] All three companies research and analyze both software and specific projects in artificial intelligence, image recognition, and face analysis. The American Civil Liberties Union and Mijente, an immigrant rights group, say the companies’ pledges have more to do with public relations at a time of heightened scrutiny of police powers than with any serious ethical objection to deploying facial recognition as a whole.

“Facial recognition technology is so inherently destructive that the safest approach is to pull it out root and stem,” said Woody Hartzog, a professor of law and computer science at the Northeastern University School of Law.[3]

The market for facial recognition tools will likely double to $7 billion by 2024, according to a report published by Markets and Markets. Its use raises concerns about ethics and privacy, though, and politicians and civil rights agencies have called for safeguards against potential misuse of the technology.[4] The World Economic Forum released the first framework for the safe and trustworthy use of facial recognition technology. It has four elements:

- Define what constitutes the responsible use of facial recognition by drafting a set of principles for action. These principles focus on privacy, bias mitigation, the proportional use of the technology, accountability, consent, the right to accessibility, children’s rights, and alternative options.

- Design best practices to support product teams in developing systems that are “responsible by design,” focusing on four main dimensions: justifying the use of facial recognition, designing a data plan that matches end-user characteristics, mitigating the risks of bias, and informing end users.

- Assess the extent to which the designed system is responsible, through an assessment questionnaire that describes which rules should be respected in each use case to comply with the principles for action.

- Validate compliance with the principles for action through an audit framework designed by a trusted third party.[5]

Having written, interpreted, and litigated ethical manuals for decades, I find the World Economic Forum’s framework well intended, hopeful, and thought-provoking. But frameworks with those qualities often overlook the fact that many bad actors will be at the table, ready to derail such lofty notions as justification, mitigation, and information. Justice Robert Jackson famously said, “As to ethics, the parties seem to me as much on a parity as the pot and the kettle. But want of knowledge or innocent intent is not ordinarily available to diminish patent protection.”[6] To his observation we should add that there may be no way to protect “us” from the bias and danger inherent in facial recognition in the wrong hands.

I am an author and a part-time lawyer with a focus on ethics and professional discipline. I teach creative writing and ethics to law students at Arizona State University.

If you have an important story you want told, you can commission me to write it for you.

[1] Woodrow Wilson “Woody” Bledsoe was an American mathematician, computer scientist, and prominent educator. He is one of the founders of artificial intelligence, making early contributions in pattern recognition and automated theorem proving. https://en.wikipedia.org/wiki/Woody_Bledsoe

[2] Olivia Solon, NBC News, June 22, 2020, https://www.nbcnews.com/tech/security/big-tech-juggles-ethical-pledges-facial-recognition-corporate-interests-n1231778

[3] Ibid.

[4] SmartCitiesWorld, https://www.smartcitiesworld.net/news/news/wef-launches-framework-for-trustworthy-use-of-facial-recognition-technology-5081

[5] Ibid.

[6] Mercoid Corp. v. Mid-Continent Investment Co., 320 U.S. 661, 679 (1944).

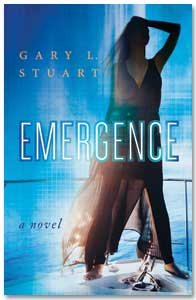

My latest novel is Emergence, the sequel to Let’s Disappear.